1 Introduction

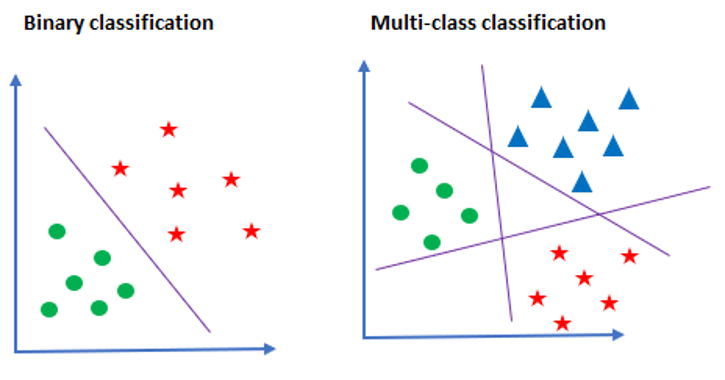

We already know from my previous posts how to train a binary classifier using “Logistic Regression” or “Support Vector Machines”. We have learned that these machine learning algorithms are, in their basic form, binary classifiers. But we can also use them for multi-class classification problems. This post explains how.

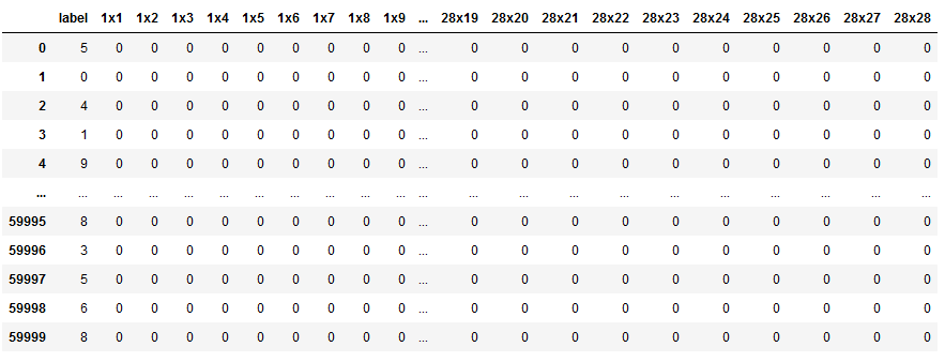

For this post, the MNIST dataset from the data science platform “Kaggle” was used. A copy of the dataset is available at https://drive.google.com/open?id=1Bfquk0uKnh6B3Yjh2N87qh0QcmLokrVk.

2 Background information on OvO and OvR

First of all, let me briefly explain the idea behind One-vs-One and One-vs-Rest classification. Say we have a classification problem with N distinct classes. In this case, we need a multi-class classifier instead of a binary one.

But we can also have Python train several binary models to solve this classification problem. scikit-learn offers two options for this, which are briefly explained below.

One-vs-One (OvO)

Here, the number of generated models depends on the number of classes: N * (N - 1) / 2 models are trained, where N is the number of classes.

If N is 10, as in our example below, the total number of learned models is 45 according to this formula. In this method, every class is paired with every other class, and one binary classifier is trained per pair. At prediction time, each classifier casts one vote for the class it predicts. The class with the most votes is assigned to the sample.
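The pairing and voting can be sketched in a few lines of plain Python. This is only an illustration of the idea, not how scikit-learn implements it: the helper names `ovo_pairs` and `ovo_vote` are hypothetical, the pairwise winners are made up, and ties are simply resolved in favour of the class seen first.

```python
from collections import Counter
from itertools import combinations


def ovo_pairs(classes):
    """Return every unordered pair of classes; one binary model is trained per pair."""
    return list(combinations(classes, 2))


def ovo_vote(pair_winners):
    """Return the class that collected the most votes from the pairwise models."""
    votes = Counter(pair_winners)
    return votes.most_common(1)[0][0]


classes = list(range(10))          # the ten digits 0..9
pairs = ovo_pairs(classes)
n = len(classes)
print(len(pairs), n * (n - 1) // 2)  # both values are 45

# Hypothetical outcome for one sample: every pairwise model that involves
# class 3 votes for 3, all other models vote for the smaller label of their pair.
winners = [3 if 3 in pair else min(pair) for pair in pairs]
predicted = ovo_vote(winners)
print(predicted)  # class 3 wins with 9 votes
```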

One-vs-Rest (OvR)

Unlike One-vs-One, One-vs-Rest produces as many learned models as there are classes. If N is 10 (as in the example below), the number of learned models is also 10. In this method, every class is paired with all remaining classes combined: each binary classifier separates one class from the rest.
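As a rough sketch of what "one class against the rest" means, the labels can be rewritten into one binary target per class, and the final prediction is the class whose binary model is most confident. The helper names `ovr_targets` and `ovr_predict` and the scores below are hypothetical and only serve as an illustration.

```python
def ovr_targets(labels, positive_class):
    """Relabel a multi-class target: 1 for the chosen class, 0 for all the rest."""
    return [1 if label == positive_class else 0 for label in labels]


def ovr_predict(scores_per_class):
    """Pick the class whose binary model reports the highest score."""
    return max(scores_per_class, key=scores_per_class.get)


labels = [0, 1, 2, 1, 0, 2]

# One binary target vector per class, so N classes give N binary problems.
targets = {k: ovr_targets(labels, k) for k in sorted(set(labels))}
print(targets[1])  # [0, 1, 0, 1, 0, 0]

# Hypothetical confidence scores of the three binary models for one sample.
scores = {0: 0.10, 1: 0.75, 2: 0.40}
predicted = ovr_predict(scores)
print(predicted)  # 1
```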

Compared to a multi-class classifier, the only thing we really have to do now is build N binary classifiers from just one base classifier. And that’s it.
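To check the model counts from this section, the fitted wrappers can be inspected through their `estimators_` attribute. The sketch below uses a small synthetic dataset from `make_blobs` as a stand-in for MNIST (an assumption made purely to keep the example fast), and `max_iter=1000` is likewise an illustrative choice.

```python
from sklearn.datasets import make_blobs
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsOneClassifier, OneVsRestClassifier

# Synthetic stand-in for MNIST (assumption): 300 points in 10 clusters.
X, y = make_blobs(n_samples=300, centers=10, n_features=5, random_state=0)

# Wrap the same binary base classifier in both strategies.
ovr = OneVsRestClassifier(LogisticRegression(max_iter=1000)).fit(X, y)
ovo = OneVsOneClassifier(LogisticRegression(max_iter=1000)).fit(X, y)

# estimators_ holds the fitted binary models of each wrapper.
n_ovr_models = len(ovr.estimators_)  # one model per class
n_ovo_models = len(ovo.estimators_)  # one model per pair of classes
print(n_ovr_models, n_ovo_models)
```

With 10 classes, this should report 10 models for One-vs-Rest and 45 for One-vs-One.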

3 Loading the libraries and the data

import numpy as np

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

from sklearn.multiclass import OneVsRestClassifier

from sklearn.multiclass import OneVsOneClassifier

from sklearn.linear_model import LogisticRegression

from sklearn.svm import SVC

from sklearn.model_selection import GridSearchCV

mnist = pd.read_csv('path/to/file/mnist_train.csv')

mnist

x = mnist.drop('label', axis=1)

y = mnist['label']

trainX, testX, trainY, testY = train_test_split(x, y, test_size=0.2)

4 OvO/OvR with Logistic Regression

Using OvO / OvR is fairly simple. See the usual training procedure here with Logistic Regression:

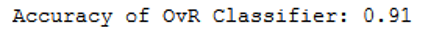

4.1 One-vs-Rest

OvR_clf = OneVsRestClassifier(LogisticRegression())

OvR_clf.fit(trainX, trainY)

y_pred = OvR_clf.predict(testX)

print('Accuracy of OvR Classifier: {:.2f}'.format(accuracy_score(testY, y_pred)))

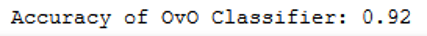

4.2 One-vs-One

OvO_clf = OneVsOneClassifier(LogisticRegression())

OvO_clf.fit(trainX, trainY)

y_pred = OvO_clf.predict(testX)

print('Accuracy of OvO Classifier: {:.2f}'.format(accuracy_score(testY, y_pred)))

4.3 Grid Search

We can even use grid search to determine the optimal hyperparameters:

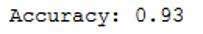

OvR

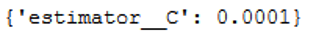

tuned_parameters = [{'estimator__C': [100, 10, 1, 0.1, 0.01, 0.001, 0.0001]}]

OvR_clf = OneVsRestClassifier(LogisticRegression())

grid = GridSearchCV(OvR_clf, tuned_parameters, cv=3, scoring='accuracy')

grid.fit(trainX, trainY)

print(grid.best_score_)

print(grid.best_params_)

grid_predictions = grid.predict(testX)

print('Accuracy: {:.2f}'.format(accuracy_score(testY, grid_predictions)))
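The `estimator__C` key in the grid above follows scikit-learn's naming for nested parameters: the part before the double underscore names the wrapped object (`estimator`), the part after it names that object's own parameter (`C`). A minimal sketch for listing the names that are available for tuning, using only the wrapper from this section:

```python
from sklearn.linear_model import LogisticRegression
from sklearn.multiclass import OneVsRestClassifier

clf = OneVsRestClassifier(LogisticRegression())

# get_params() lists the wrapper's own parameters plus those of the wrapped
# estimator, the latter prefixed with 'estimator__'.
param_names = sorted(clf.get_params().keys())
nested = [name for name in param_names if name.startswith('estimator__')]
print(nested)

# set_params() accepts the same names; GridSearchCV relies on this mechanism.
clf.set_params(estimator__C=0.1)
print(clf.estimator.C)  # 0.1
```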

OvO

tuned_parameters = [{'estimator__C': [100, 10, 1, 0.1, 0.01, 0.001, 0.0001]}]

OvO_clf = OneVsOneClassifier(LogisticRegression())

grid = GridSearchCV(OvO_clf, tuned_parameters, cv=3, scoring='accuracy')

grid.fit(trainX, trainY)

print(grid.best_score_)

print(grid.best_params_)

grid_predictions = grid.predict(testX)

print('Accuracy: {:.2f}'.format(accuracy_score(testY, grid_predictions)))

5 OvO/OvR with SVM

The same procedure works with SVM as well.

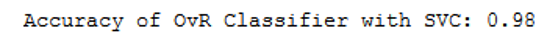

5.1 One-vs-Rest

OvR_SVC_clf = OneVsRestClassifier(SVC())

OvR_SVC_clf.fit(trainX, trainY)

y_pred = OvR_SVC_clf.predict(testX)

print('Accuracy of OvR Classifier with SVC: {:.2f}'.format(accuracy_score(testY, y_pred)))

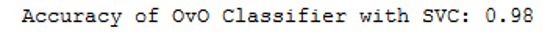

5.2 One-vs-One

OvO_SVC_clf = OneVsOneClassifier(SVC())

OvO_SVC_clf.fit(trainX, trainY)

y_pred = OvO_SVC_clf.predict(testX)

print('Accuracy of OvO Classifier with SVC: {:.2f}'.format(accuracy_score(testY, y_pred)))

5.3 Grid Search

GridSearch also works with this method:

OvR

# Note: SVC ignores gamma when kernel='linear', so the five gamma values repeat the same fit.

tuned_parameters = [{'estimator__C': [0.1, 1, 10, 100, 1000],

                     'estimator__gamma': [1, 0.1, 0.01, 0.001, 0.0001],

                     'estimator__kernel': ['linear']}]

OvR_SVC_clf = OneVsRestClassifier(SVC())

grid = GridSearchCV(OvR_SVC_clf, tuned_parameters, cv=3, scoring='accuracy')

grid.fit(trainX, trainY)

OvO

# Note: SVC ignores gamma when kernel='linear', so the five gamma values repeat the same fit.

tuned_parameters = [{'estimator__C': [0.1, 1, 10, 100, 1000],

                     'estimator__gamma': [1, 0.1, 0.01, 0.001, 0.0001],

                     'estimator__kernel': ['linear']}]

OvO_SVC_clf = OneVsOneClassifier(SVC())

grid = GridSearchCV(OvO_SVC_clf, tuned_parameters, cv=3, scoring='accuracy')

grid.fit(trainX, trainY)
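Before starting these searches, it can help to estimate how many binary SVMs will be trained, since a grid search multiplies the model counts from section 2. The following back-of-the-envelope sketch counts the fits for the grid above, assuming 10 classes and `cv=3`. It does not include the final refit on the full training set that `GridSearchCV` performs by default.

```python
from itertools import product

param_grid = {
    'estimator__C': [0.1, 1, 10, 100, 1000],
    'estimator__gamma': [1, 0.1, 0.01, 0.001, 0.0001],
    'estimator__kernel': ['linear'],
}
cv_folds = 3
n_classes = 10

# Every combination of parameter values is one candidate of the grid search.
candidates = list(product(*param_grid.values()))
n_candidates = len(candidates)                       # 5 * 5 * 1 = 25

# Each candidate is fitted once per cross-validation fold.
cv_fits = n_candidates * cv_folds                    # 75

# Each of those fits trains N (OvR) or N * (N - 1) / 2 (OvO) binary SVMs.
ovr_binary_fits = cv_fits * n_classes                            # 750
ovo_binary_fits = cv_fits * n_classes * (n_classes - 1) // 2     # 3375

print(n_candidates, cv_fits, ovr_binary_fits, ovo_binary_fits)
```

Because gamma has no effect on a linear kernel, dropping it from this grid would reduce the number of candidates from 25 to 5 without changing the result.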