1 Introduction

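Multicollinearity is one of the things to watch for when pre-processing data for regression analysis: predictors that are strongly correlated with each other carry largely redundant information and make the estimated coefficients unstable. This post is about finding highly correlated predictors within a dataframe.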

For this post, the Auto-mpg dataset from the data science platform Kaggle was used. You can download it from my GitHub Repository.

2 Loading the libraries and the data

import pandas as pd

import numpy as np

import matplotlib

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.feature_selection import VarianceThreshold

cars = pd.read_csv("path/to/file/auto-mpg.csv")

3 Preparation

# convert horsepower to a numeric column (entries that cannot be parsed become NaN)

# replace missing values with the column's mean

cars["horsepower"] = pd.to_numeric(cars.horsepower, errors='coerce')

cars_horsepower_mean = cars['horsepower'].fillna(cars['horsepower'].mean())

cars['horsepower'] = cars_horsepower_mean

When we talk about correlation, it’s easy to get a first glimpse with a heatmap:

plt.figure(figsize=(8,6))

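# correlate numeric columns only; the text column 'car name' would raise an error in recent pandas versions

cor = cars.select_dtypes(include="number").corr()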

sns.heatmap(cor, annot=True, cmap=plt.cm.Reds)

plt.show()

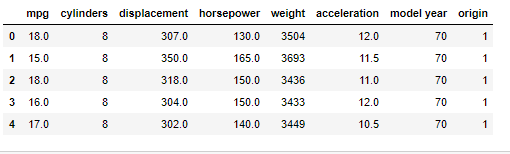

Definition of the predictors and the criterion:

predictors = cars.drop(['mpg', 'car name'], axis = 1)

criterion = cars["mpg"]

predictors.head()

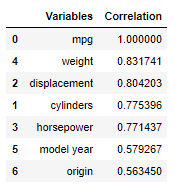

4 Correlations with the output variable

To get an idea of which variables may be important for our model, we keep those whose absolute correlation with mpg exceeds a threshold:

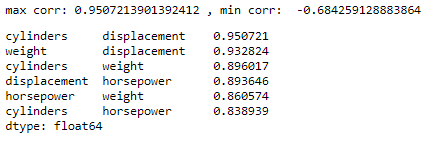

threshold = 0.5

cor_criterion = abs(cor["mpg"])

relevant_features = cor_criterion[cor_criterion>threshold]

relevant_features = relevant_features.reset_index()

relevant_features.columns = ['Variables', 'Correlation']

relevant_features = relevant_features.sort_values(by='Correlation', ascending=False)

relevant_features

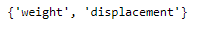
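The same kind of correlation matrix can also be used to flag predictors that are highly correlated with each other rather than with the criterion. The following is only a minimal sketch of one common approach, not necessarily the method used for this post: the function names `correlated_pairs` and `columns_to_drop`, the column labels, the default threshold of 0.9, and the small synthetic dataframe are all assumptions made for illustration.

```python
import numpy as np
import pandas as pd


def correlated_pairs(predictors: pd.DataFrame, threshold: float = 0.9) -> pd.DataFrame:
    """List predictor pairs whose absolute Pearson correlation exceeds `threshold`.

    Hypothetical helper for illustration; the default threshold is arbitrary.
    """
    # Absolute correlations between numeric predictors only
    corr = predictors.select_dtypes(include="number").corr().abs()

    # Keep the strict upper triangle so every pair appears once
    # and the diagonal (a variable with itself) is ignored
    mask = np.triu(np.ones(corr.shape, dtype=bool), k=1)
    pairs = corr.where(mask).stack().reset_index()
    pairs.columns = ["Variable 1", "Variable 2", "Correlation"]

    # Rows below the threshold (and any remaining NaN rows) are filtered out here
    pairs = pairs[pairs["Correlation"] > threshold]
    return pairs.sort_values(by="Correlation", ascending=False).reset_index(drop=True)


def columns_to_drop(predictors: pd.DataFrame, threshold: float = 0.9) -> list:
    """For each flagged pair, suggest dropping the second variable (a simple convention)."""
    pairs = correlated_pairs(predictors, threshold)
    return sorted(set(pairs["Variable 2"]))


# Synthetic example data (not the Auto-mpg dataset): b is almost a multiple of a
rng = np.random.default_rng(0)
a = rng.normal(size=200)
demo = pd.DataFrame({
    "a": a,
    "b": 2 * a + rng.normal(scale=0.01, size=200),
    "c": rng.normal(size=200),
})

print(correlated_pairs(demo, threshold=0.9))
print(columns_to_drop(demo, threshold=0.9))
```

With the data from this post, the call would be `correlated_pairs(predictors, threshold=0.9)`. Which variable of a pair to drop is a judgment call; an alternative to the convention above is to keep whichever one correlates more strongly with the criterion.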

7 Conclusion

This post has shown how to identify highly correlated variables and exclude them from further use.